
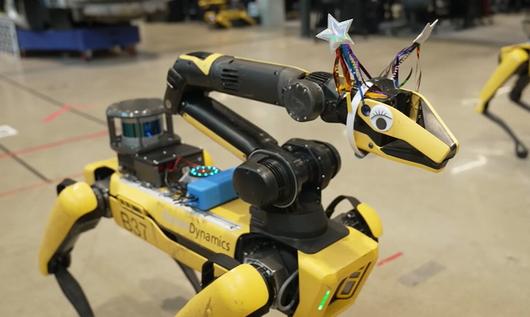

A robot tour guide using Spot integrated with ChatGPT and other AI models. [Courtesy of Boston Dynamics]


Artificial intelligence (AI) is expanding beyond knowledge work such as text and image generation and into the physical world, where it is taking over specific tasks in particular fields.

According to multiple industry sources, Boston Dynamics, a U.S. engineering and robotics design company, is experimenting with integrating the ChatGPT application programming interface (API) into its robot dog Spot to turn it into a tour guide. A speaker attached to the robot delivers the generated text as synthesized speech, and moving the gripper gives the impression that the robot is talking.

Matt Klingensmith, a principal software engineer at Boston Dynamics, said the company gave the robot a script and combined it with camera input so that it can perceive its surroundings and answer questions.

The robots are well suited to in-house tours because they already know the layout of the facility; visitors need only follow them.

A research team led by Professor Shim Hyun-chul of the Korea Advanced Institute of Science and Technology (KAIST) is developing Pibot, a humanoid robot pilot that can fly an aircraft without the aircraft itself having to be converted for fully autonomous operation.

Pibot, which combines AI with a physical robot body, stands 165 centimeters tall and weighs around 65 kilograms. Its jointed arms and fingers operate the flight controls, and its cameras monitor conditions inside and outside the aircraft during flight. Pibot reads and interprets flight manuals supplied as computer files, then sits in the cockpit and flies the aircraft.

In a flight simulator, the robot is designed to handle the entire flight, including engine start, taxiing, takeoff, cruising, and landing.

The research team's current goal is to fly actual commercial aircraft. Because it is integrated with ChatGPT, a large language model (LLM), the robot pilot can respond immediately when an incident occurs, extracting information from flight control manuals and emergency procedures to find the safest flight path.

Competition to apply hyperscale AI to robots that operate in the physical world is fierce, as companies try to move AI beyond purely digital spaces and get machines to perceive and act like humans.

This trend is driven by the rapid advancement of AI models, and the AI robot market is expected to grow explosively. According to analysis firm NextMSC, the AI robot market is projected to grow at an average annual rate of 32.95 percent, from $95.7 billion in 2021 to $184.7 billion in 2030.

The race to build advanced robot "brains" is equally intense. Just as companies rushed to develop in-house LLMs after ChatGPT emerged, they have now started building their own AI models for robots.

Toyota Research Institute (TRI) describes its AI-based robot training technique, unveiled in September 2023, as a large behavior model (LBM) for robots. The institute said its in-house LBM would teach robots 1,000 new skills by the end of 2024.

Google also unveiled Robotics Transformer 2 (RT-2), an AI model for robots, in July 2023. It improves on RT-1, introduced the year before, and is designed to let robots understand commands without explicit programming or separate training.

With RT-1, engineers had to program tasks such as picking up and moving objects or opening drawers individually. RT-2, by contrast, learns skills on its own from visual information on the internet, using images and text.

Nvidia Corp. has introduced Eureka, an AI agent that automatically generates reward algorithms for training robots. By combining the natural language capabilities of OpenAI's GPT-4 LLM with reinforcement learning, it helps robots learn complex skills.

The company claims that robots equipped with Eureka can perform around 30 tasks, such as spinning a pen between their fingers like a human, opening drawers and cabinets, throwing and catching a ball, and using scissors.
